August 7, 2015

Picture the brain of a child: constantly learning, accumulating and assimilating new information at lightning speed. The developing brain processes data and forms new neural networks to write data to memory.

Now what if the mind’s thought-processing capacity keeps growing, but the neural connections to memory can’t keep up? The child’s increasingly powerful brain isn’t much use without the ability to quickly store all the new ideas and information.

That is the dilemma facing the modern computing industry: lagging communication between new superfast processors and memory chips.

“Memory interfaces are already an important bottleneck in computer system performance—it’s a big problem,” says Tony Chan Carusone, a professor in The Edward S. Rogers Sr. Department of Electrical & Computer Engineering.

“Over the next five years these memory interfaces are going to need a complete rethink. We’ve already seen parallelism reduce power and improve performance for the processor’s internal architecture, and that’s the direction the memory interface is going too.”

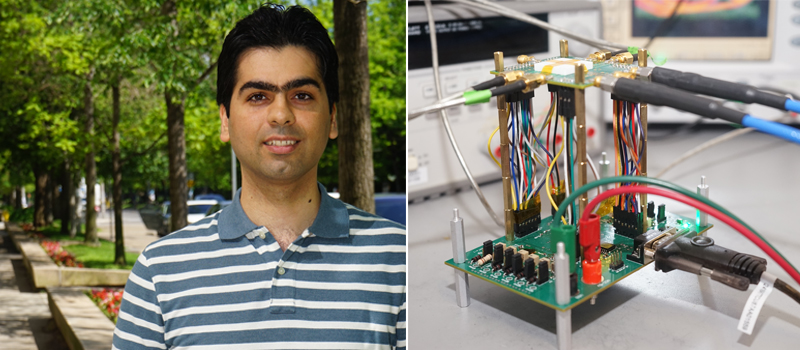

PhD candidate Behzad Dehlaghi is tackling this problem, and his proposed solution combines the best of two separate camps in industry.

The first, called wide input-output or wideIO, advocates for more wires connecting chips—Chan Carusone and Dehlaghi liken this to adding more lanes to a highway.

“But if we’re going to fit two-thousand lanes on a highway, the lanes are going to have to be a lot thinner,” says Chan Carusone. “So rather than drive cars down the lanes, they ride bikes.” Slower speeds but more lanes still amount to more data moving back and forth faster.

The second approach is to drive faster down the lanes we already have—to design rocket ships to fly down the highway. This requires engineering better transmitters and receivers to send the rocket ships flying without crashing into each other.

At first, Dehlaghi’s solution sounds impossible: he’s flying rocket ships down high-density bicycle lanes, and at low power. “We are the first ones who’ve used this technology in Canada,” he says.

With support from partners CMC Microsystems and Huawei Canada, Dehlaghi has designed chips that launch signals at extremely high speeds, 20 Gb/s per lane, along with a miniature silicon layer called an interposer that connects two chips together.
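The highway analogy comes down to simple arithmetic: total bandwidth is roughly the number of lanes multiplied by the data rate of each lane. The short Python sketch below illustrates that trade-off. The 20 Gb/s per-lane rate comes from the article and the 2,000-lane figure is borrowed from Chan Carusone's analogy; the 1 Gb/s "bicycle" rate, the 32-lane count, and the function name `aggregate_gbps` are assumptions made up for illustration, not details of Dehlaghi's design.

```python
def aggregate_gbps(lanes: int, gbps_per_lane: float) -> float:
    """Return total link bandwidth in Gb/s: lane count times per-lane rate."""
    return lanes * gbps_per_lane


# Illustrative numbers only; apart from 20 Gb/s per lane, none come from the design.
wide_slow = aggregate_gbps(lanes=2000, gbps_per_lane=1.0)    # many slow "bicycle" lanes
narrow_fast = aggregate_gbps(lanes=32, gbps_per_lane=20.0)   # a few fast "rocket ship" lanes
wide_fast = aggregate_gbps(lanes=2000, gbps_per_lane=20.0)   # both ideas combined

for label, total in [("wide and slow", wide_slow),
                     ("narrow and fast", narrow_fast),
                     ("wide and fast", wide_fast)]:
    print(f"{label}: {total:,.0f} Gb/s")
```

Under these assumed figures, combining many lanes with a high per-lane rate multiplies the two gains rather than trading one for the other, which is the appeal of running fast links over a dense interposer.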

“The interposer is an intermediary between the chips and the circuit board,” says Chan Carusone. “It’s a new layer that goes in between, and allows the chips to talk to each other with this tremendous density.”

“We’re also using a straightforward, inexpensive CMOS technology for the interposer,” adds Dehlaghi, a choice that is crucial to incorporating the layer into standard fabrication processes.

Their novel approach wasn’t intuitive: several packaging experts they consulted about the idea said it couldn’t be done.

“It’s been a very challenging project,” says Dehlaghi. “When I talked to the companies that do the attachment between these dies, they predicted that our design had less than a 40 percent chance of working… so I’m glad that it’s all working together.”

Having successfully integrated the components and tested his platform, Dehlaghi provided CMC Microsystems with a reference design for other Canadian researchers to use as a starting point.

“This was something no Canadian university had done—the papers in this area, they’re not coming from universities, they’re coming from industry,” says Chan Carusone. “So for a university to pull all this together? It’s pretty great, or pretty lucky, or both.”

More information:

Marit Mitchell

Senior Communications Officer

The Edward S. Rogers Sr. Department of Electrical & Computer Engineering

416-978-7997; marit.mitchell@utoronto.ca