September 20, 2019

Tyler Irving

Next week, two papers by a team of researchers led by Professor Parham Aarabi will be presented at the IEEE International Workshop on Multimedia Signal Processing in Kuala Lumpur, Malaysia. The first covers the use of artificial intelligence (AI) to mimic a real person in VR — also known as a deepfake — while the second provides a novel way to assess image quality in VR cameras.

Deepfakes — a combination of the term “deep learning” and the word “fake” — leverage AI techniques to combine or superimpose existing video or images onto a source video or image. The internet is replete with examples, such as a fake video of Barack Obama, voiced by actor Jordan Peele.

In order to create a deepfake, the algorithms require a large library of images or videos of the intended subject. From these, the software “learns” what the subject looks like in various poses or positions. It is then able to map this data onto a source image or video featuring a different person, creating a sort of ‘virtual costume.’

Aarabi and his team, including Joey Bose (MASc 1T8, now a PhD candidate at MILA in Montréal), filmed a stereoscopic VR video of a test subject sitting in a chair, talking about her family. They then used a library of online videos, in this case footage of comedian and TV host John Oliver, to make it appear as though Oliver was the one talking.

“A stereoscopic VR recording has two channels, one for each eye, but in terms of manipulation, they can be treated independently,” says Bose. “Therefore, we were able to use an off-the-shelf algorithm for creating deepfakes. The only difference is that, to the best of our knowledge, this is the first time anyone has applied this technology to VR.”
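The idea Bose describes, treating each eye's channel as an ordinary video stream, can be illustrated with a minimal sketch. It assumes a side-by-side frame layout (left eye in the left half, right eye in the right half), which the article does not specify, and it uses a trivial `invert` function as a hypothetical stand-in for a real single-view deepfake step. None of these names come from the team's work.

```python
from typing import Callable, List, Tuple

# A frame is a list of pixel rows; a stand-in for a real image array.
Frame = List[List[int]]


def split_side_by_side(frame: Frame) -> Tuple[Frame, Frame]:
    """Split a side-by-side stereo frame into left-eye and right-eye views.

    Assumption: the left half of each row belongs to the left eye.
    """
    width = len(frame[0])
    if width % 2 != 0:
        raise ValueError("side-by-side frame must have an even width")
    half = width // 2
    left = [row[:half] for row in frame]
    right = [row[half:] for row in frame]
    return left, right


def process_stereo_frame(frame: Frame, transform: Callable[[Frame], Frame]) -> Frame:
    """Apply the same single-view transform to each eye independently,
    then join the two results back into one side-by-side frame."""
    left, right = split_side_by_side(frame)
    new_left = transform(left)
    new_right = transform(right)
    return [l_row + r_row for l_row, r_row in zip(new_left, new_right)]


def invert(view: Frame) -> Frame:
    """Hypothetical placeholder for a face-swap step: inverts 8-bit pixels."""
    return [[255 - pixel for pixel in row] for row in view]


if __name__ == "__main__":
    stereo = [[0, 10, 20, 30], [40, 50, 60, 70]]
    print(process_stereo_frame(stereo, invert))
```

Because the transform only ever sees one view at a time, any tool built for ordinary video could in principle be dropped in where `invert` appears.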

Bose and the team only manipulated a narrow square containing the subject’s eyes and upper mouth, and did not alter the audio of their deepfake — their goal was merely to demonstrate proof-of-concept rather than create a convincing deepfake.

But concerns have been raised that in the hands of more sophisticated and nefarious actors, deepfakes could be used to spread misinformation or propaganda — the Obama video above was produced as a public service announcement, warning viewers not to be fooled by deepfakes.

Aarabi doesn’t discount the need to watch out for abuses, but he believes the technology could have many positive applications. These include educational programs that could bring students to places they might otherwise never visit, medical simulations that help train doctors and surgeons, or even virtual assistants that are more engaging than the disembodied voices of Siri or Google Assistant. He also points to the ability of VR to trigger precious memories.

“Imagine if I could see someone close to me that had passed away, and feel from this VR experience that I am close to them,” he says. “To me, that would be a very empowering thing.”

The team’s second paper addresses camera quality. While traditional video can be recorded with just one image sensor, VR cameras need at least two in order to produce stereoscopic vision. But if the cameras aren’t of high enough quality, the recording becomes pixelated, making the resulting video look unrealistic. That effect is compounded when images from multiple sensors are stitched together via software.

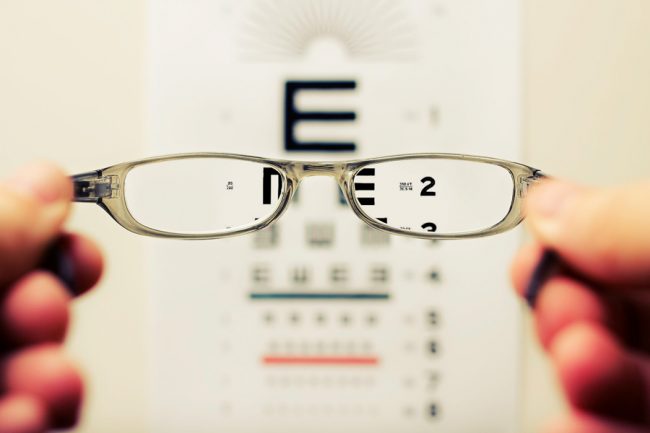

“We realized that there’s no single, universally accepted standard to measure the quality of VR videos,” says Anastasia Kolesnikov (CompE 1T8), who worked with Aarabi on her undergraduate thesis. “Then we came up with the idea of using an eye chart.”

The team obtained a copy of the LogMAR chart, a standard visual acuity test used by optometrists and ophthalmologists around the world. Fifteen subjects viewed the chart in real life, then viewed a VR video of the same chart. By comparing their scores, the researchers were able to assess the performance of both off-the-shelf VR cameras and those constructed by pairing two traditional video recorders.

“If you have 20-20 vision in real life but your vision is horrible in the VR space, it means the cameras did something wrong,” says Aarabi. “It’s a simple, quantifiable measure of VR quality that allows you to compare sets and cameras.”
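One way such a comparison could be computed is sketched below. The article does not say how the team combined the scores, so this is only an illustration: it assumes each subject has one logMAR score for the real chart and one for the VR recording (on the logMAR scale, 0.0 corresponds to 20/20 vision and lower is better), and it takes the mean difference as a per-camera figure. The function names and the sample numbers are hypothetical.

```python
from statistics import mean
from typing import Dict, List, Tuple

# Each pair is (real-life logMAR, VR logMAR) for one subject.
ScorePair = Tuple[float, float]


def acuity_loss(real_logmar: float, vr_logmar: float) -> float:
    """Acuity lost in VR for one subject; positive means VR looked worse."""
    return vr_logmar - real_logmar


def mean_acuity_loss(scores: List[ScorePair]) -> float:
    """Average acuity loss across all subjects for one camera."""
    if not scores:
        raise ValueError("at least one subject is required")
    return mean(acuity_loss(real, vr) for real, vr in scores)


def rank_cameras(results: Dict[str, List[ScorePair]]) -> List[str]:
    """Order camera names from smallest to largest mean acuity loss."""
    return sorted(results, key=lambda name: mean_acuity_loss(results[name]))


if __name__ == "__main__":
    # Made-up numbers for illustration only, not data from the study.
    results = {
        "camera_a": [(0.0, 0.3), (0.1, 0.5), (-0.1, 0.2)],
        "camera_b": [(0.0, 0.1), (0.1, 0.2), (-0.1, 0.1)],
    }
    for name in rank_cameras(results):
        print(name, round(mean_acuity_loss(results[name]), 3))
```

A camera with a mean loss near zero would be one through which subjects read the chart about as well as they do in person.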

He adds that such a universal standard could help the nascent VR industry overcome some of the roadblocks to more widespread adoption of the technology.

“VR is a really impactful medium that could be applied in many of the same ways that traditional video is, and more besides,” says Aarabi. “We hope this research will reduce the barriers that are preventing it from becoming mainstream.”

This story originally appeared in U of T Engineering News.

More information:

Jessica MacInnis

Senior Communications Officer

The Edward S. Rogers Sr. Department of Electrical & Computer Engineering

416-978-7997; jessica.macinnis@utoronto.ca